I am frequently asked to report on user permissions across the enterprise. Each site collection, probably each site, and possibly each library and item has its own permissions. They are visible one at a time, but the user interface offers no way to report on them together. This little PowerShell script I wrote outputs the users and roles of every site in a site collection in CSV format, which is easily opened in Excel and manipulated via a PivotTable. I even published the PivotTable spreadsheet in Excel Services so end users can manipulate the views interactively in a browser. Here it is:

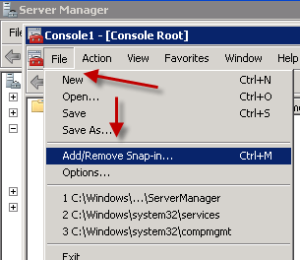

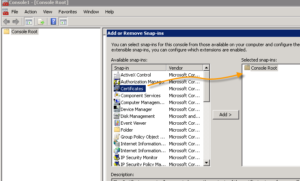

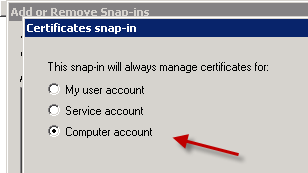

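    # Load the SharePoint cmdlets when not running in the SharePoint Management Shell
    Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

    $siteCol = Get-SPSite "http://SharePoint"   # replace with your own web app URL
    $i = 0                  # running counter of sites (webs)
    $webPermissions = ""    # accumulates the CSV text

    Get-SPWeb -Site $siteCol -Limit ALL | ForEach-Object {
        $i++
        $j = 0              # counter of users within the current site
        $site = $_
        $str1 = $i.ToString() + "," + $site.Title + "," + $site.Url + ","
        Write-Host $str1
        foreach ($usr in $site.Users) {
            $j++
            $webPermissions += $str1 + $j.ToString() + "," + $usr.UserLogin + "," + $usr.DisplayName + "," + $usr.Roles + "`r`n"
        }
        $site.Dispose()
    }

    $webPermissions += "`r" + "`n"
    $webPermissions | Out-File -FilePath L:\logs\JP_security.csv   # I am partial to my own initials; replace with your name/path
    $webPermissions     # echo the result to the console
    $siteCol.Dispose()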
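
Before building the PivotTable, it can help to sanity-check the output in PowerShell. The sketch below counts users per site. The script writes no header row, so the column names here are my own assumption, and the sample rows are hypothetical stand-ins in the same seven-column shape as the script's output.

```powershell
# Assumed column names: the script writes no header row, so we supply one.
$columns = 'SiteNo', 'Title', 'Url', 'UserNo', 'Login', 'DisplayName', 'Roles'

# Hypothetical sample rows shaped like the script's output.
$sample = @'
1,Home,http://SharePoint,1,DOMAIN\alice,Alice Example,Full Control
1,Home,http://SharePoint,2,DOMAIN\bob,Bob Example,Read
2,Team,http://SharePoint/team,1,DOMAIN\alice,Alice Example,Contribute
'@

# Parse the text into objects; drop any row without a URL (e.g. blank lines).
$rows = $sample -split "`r?`n" |
    ConvertFrom-Csv -Header $columns |
    Where-Object { $_.Url }

# Count users per site, largest first.
$perSite = $rows |
    Group-Object -Property Url |
    Sort-Object -Property Count -Descending |
    Select-Object -Property Count, Name

$perSite | Format-Table -AutoSize
```

To run this against real output, replace the sample block with `Import-Csv -Path <your csv path> -Header $columns`. A site title or display name that contains a comma will shift the columns, because the script does not quote its fields.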