Introduction

Imagine this: you’re in a pivotal meeting, ready to make a key decision for your company. Excitement fills the room as you prepare to review the Power BI report, but suddenly, the dashboard lags—displaying outdated data.

Two common methods exist for updating Power BI data:

- Manual Refresh: Data updates occur via backend processes requiring manual intervention.

- Real-Time Integration: Data flows automatically into reports as soon as it is updated.

With Power Apps integration, the second method becomes a reality. By linking Power Apps with Power BI, you can ensure reports update dynamically, enabling real-time insights for smarter decision-making without delays.

This blog explores how to achieve seamless real-time updates in Power BI by leveraging Power Apps.

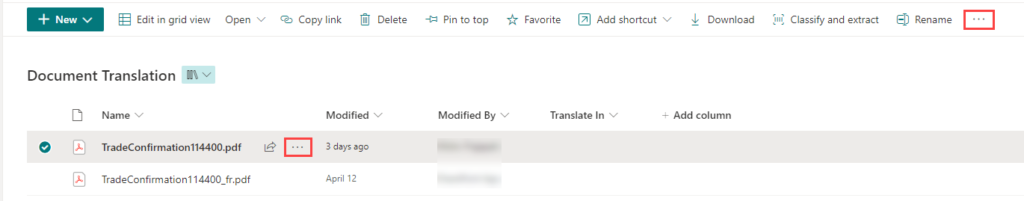

Real-Time Data Updates: A Practical Example

Picture this: as sales figures or inventory data change in Power Apps, the corresponding Power BI reports refresh right away, keeping decision-makers informed with the most current insights.

Here is the visual representation of Power BI and Power Apps integration:

Summary

In this blog, we will dive deep into how you can leverage Power Apps to push data updates directly to Power BI, ensuring that your report is always showing the most current and accurate information available. Let’s get started with a step-by-step guide to setting up real-time data updates between Power Apps and Power BI.

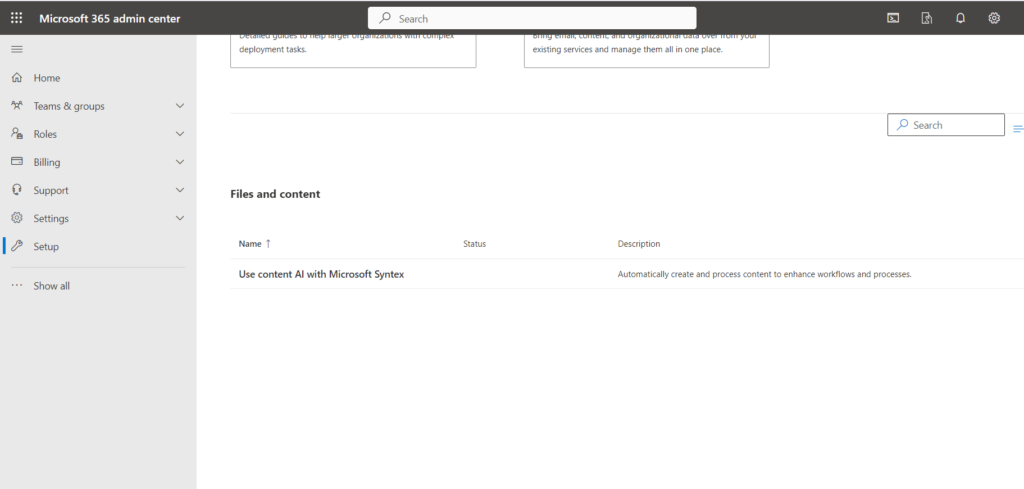

Steps to Integrate Power Apps Visual with Power BI

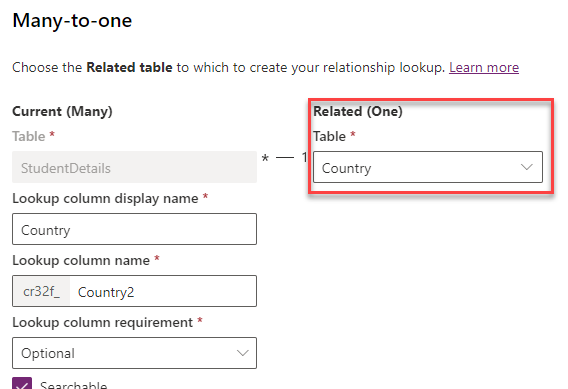

Prepare Data Source

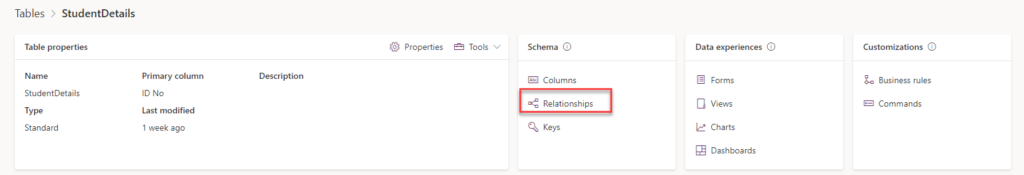

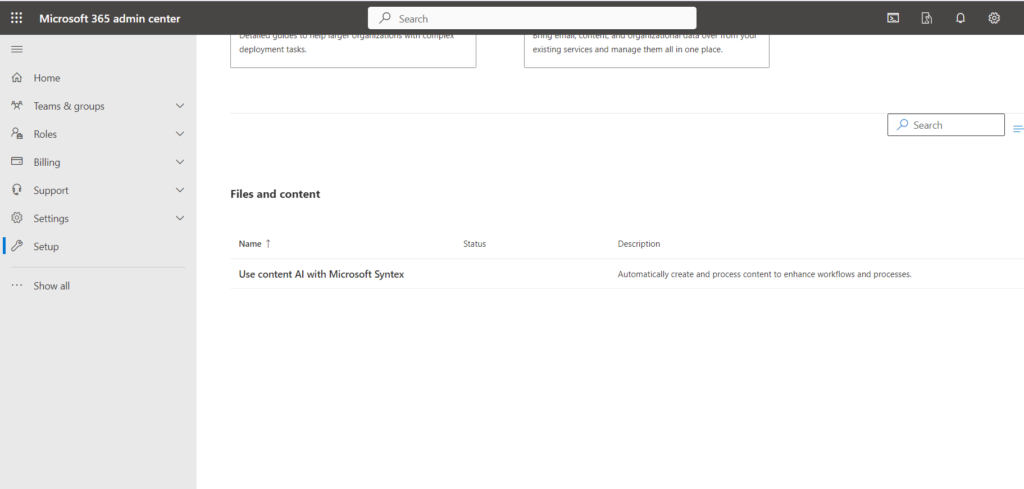

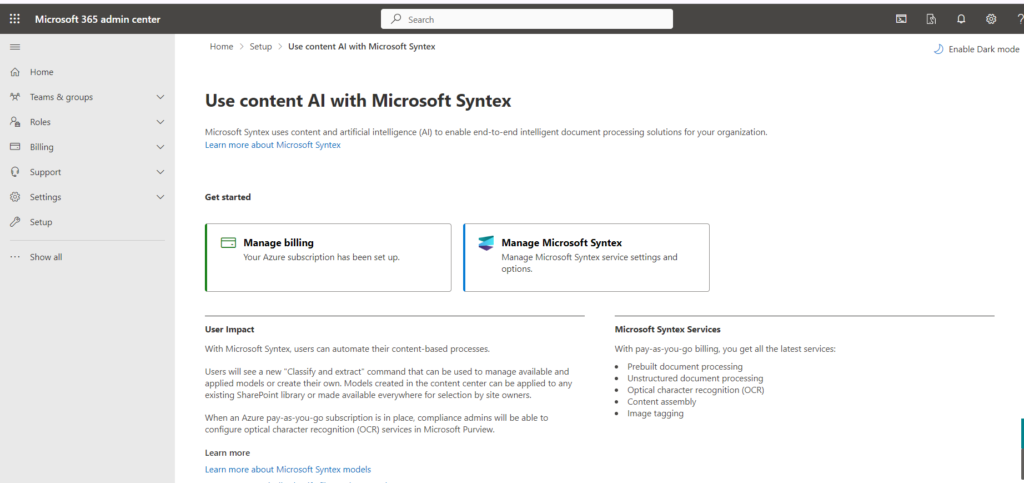

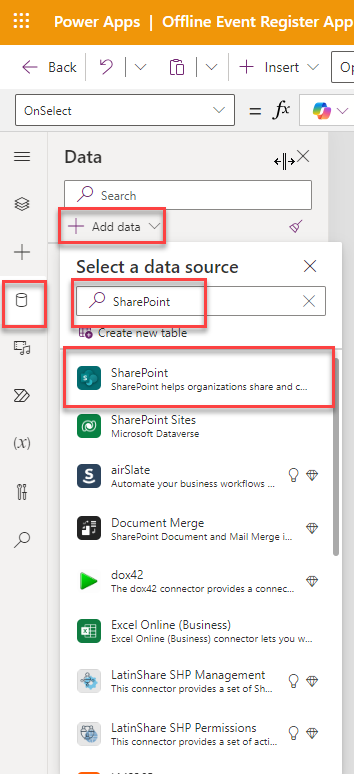

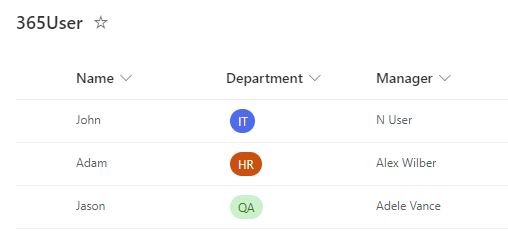

Power BI and Power Apps support multiple data sources such as SharePoint, Dataverse, and SQL databases.

Ensure that your data source supports DirectQuery. For example:

- For SQL databases, make sure the table you need is available and that the account used for the connection has the permissions required to read and update it.

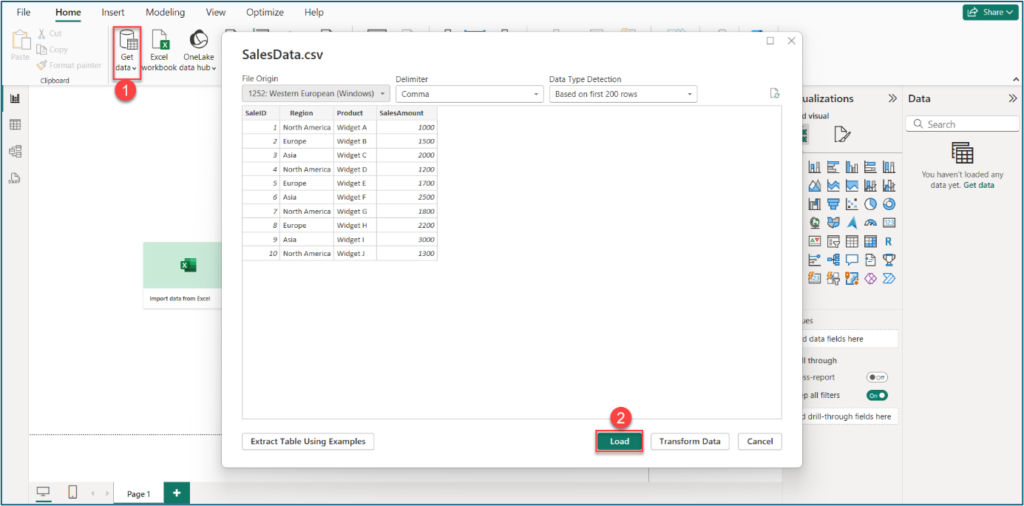

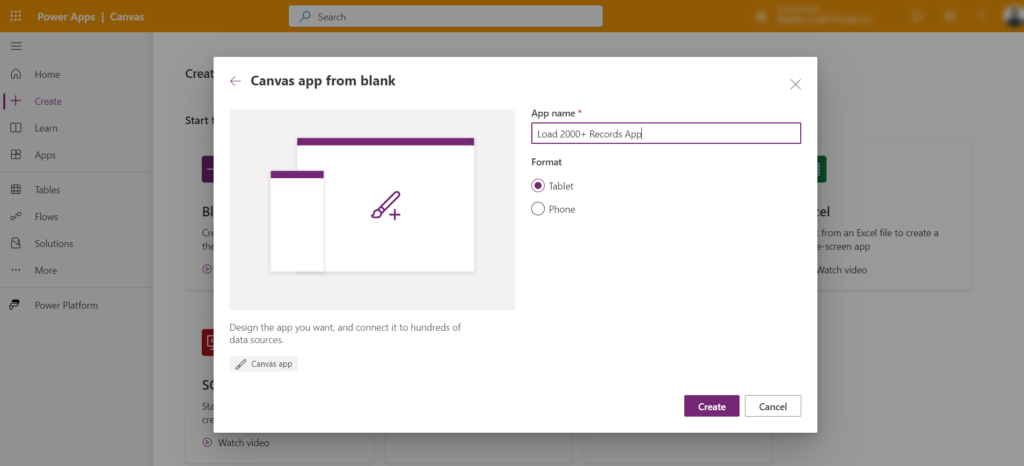

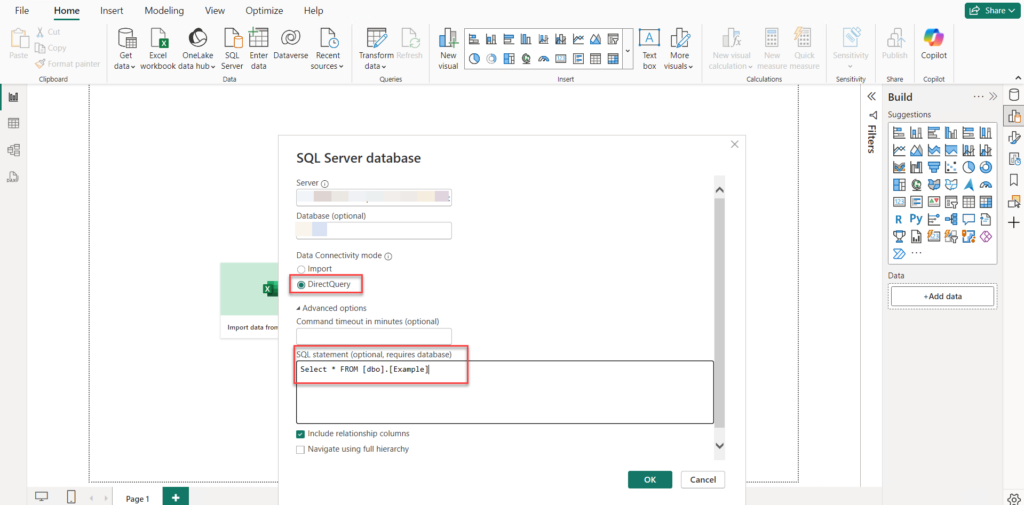

Create a Power BI Report

- Open Power BI Desktop and create a new blank report.

- Choose SQL Server as the data source, connect to your server, and select “DirectQuery” as the data connectivity mode. Add your table to the report.

Why Use DirectQuery?

DirectQuery sends queries to the data source whenever a visual is loaded or refreshed, instead of storing a copy of the data in the report. This keeps the report current without manual dataset refreshes. Unlike Import mode, which shows data only as of the last manual or scheduled refresh, DirectQuery avoids duplicating data and reflects the latest information each time the visuals are re-queried.

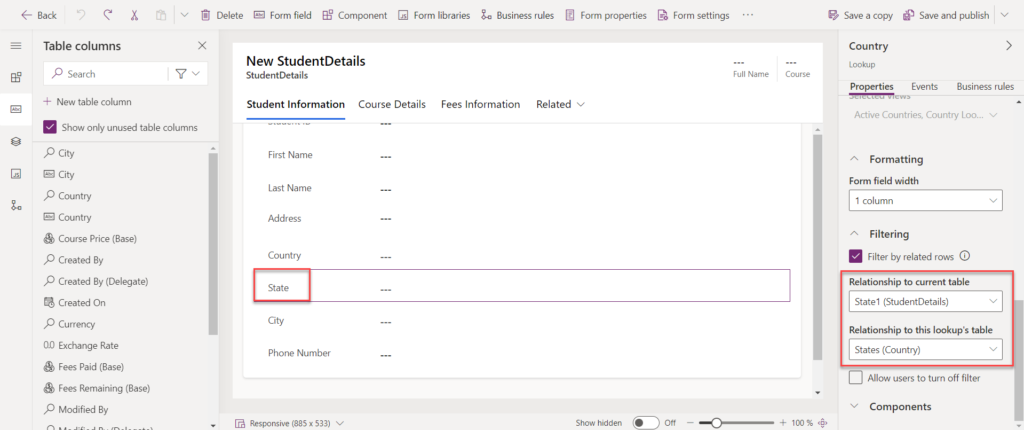

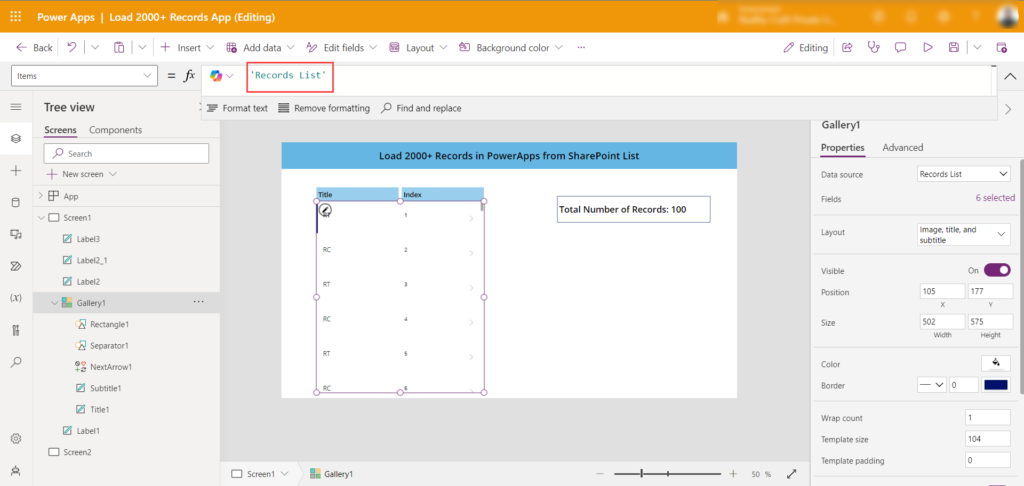

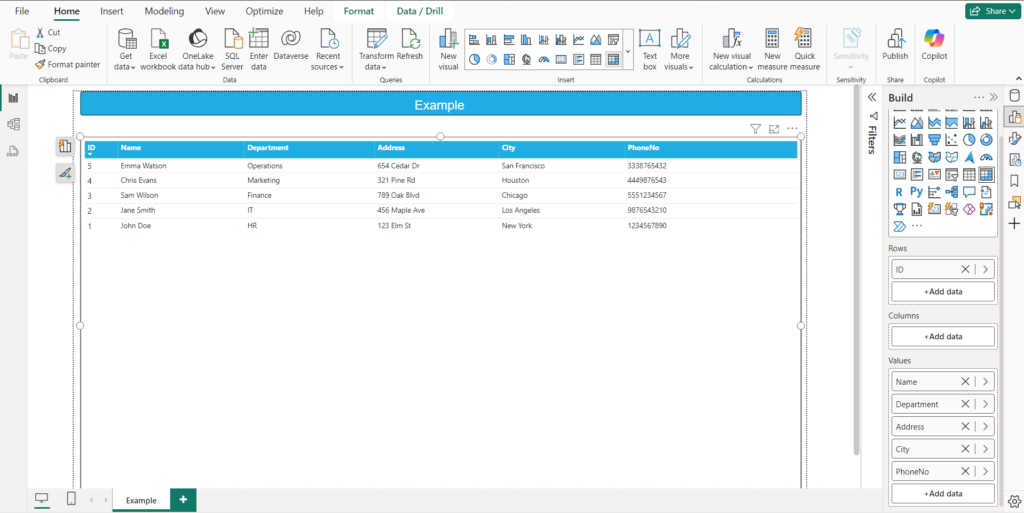

Add Visual to Power BI Report

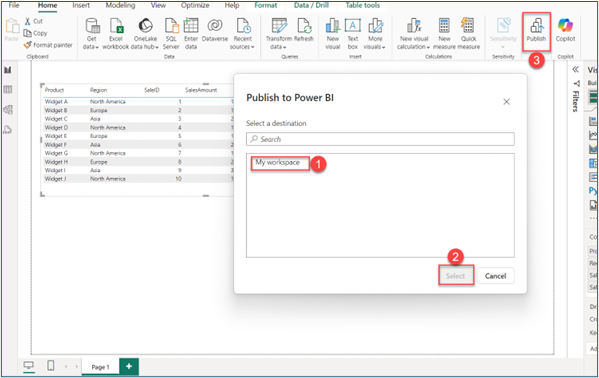

After setting up DirectQuery, add the visual (e.g., a matrix visual) in which you want to display the data. Add the relevant columns to it and publish your report to the workspace.

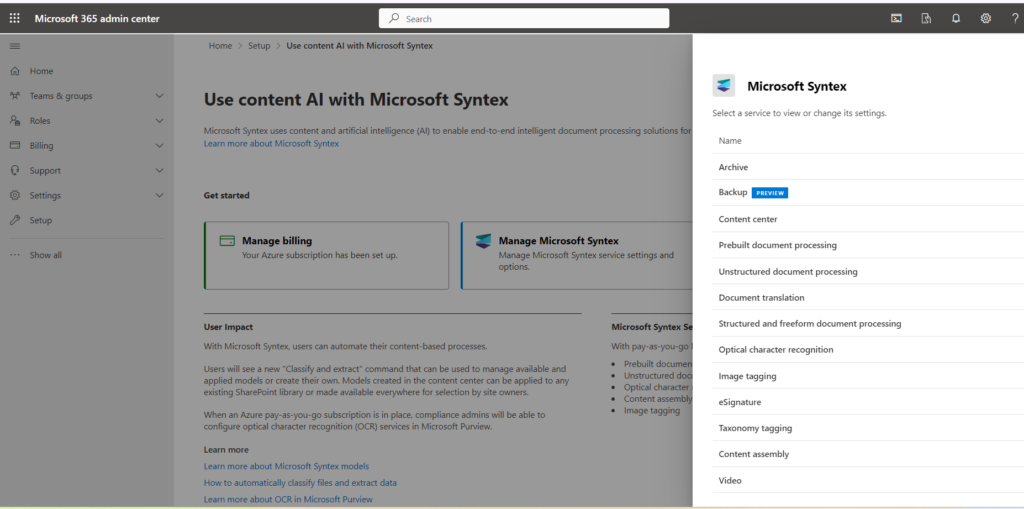

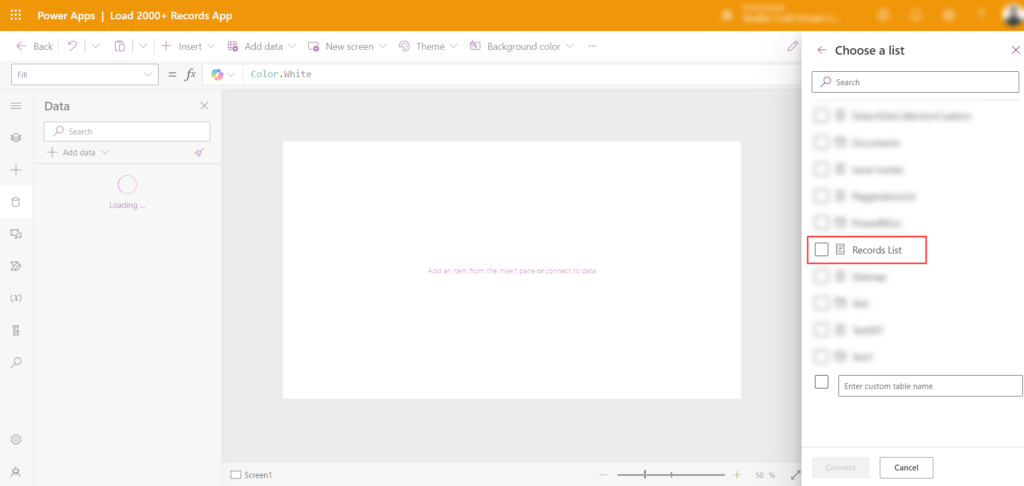

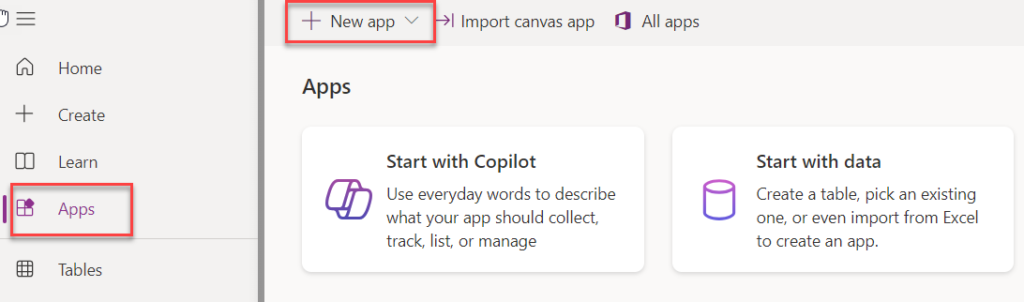

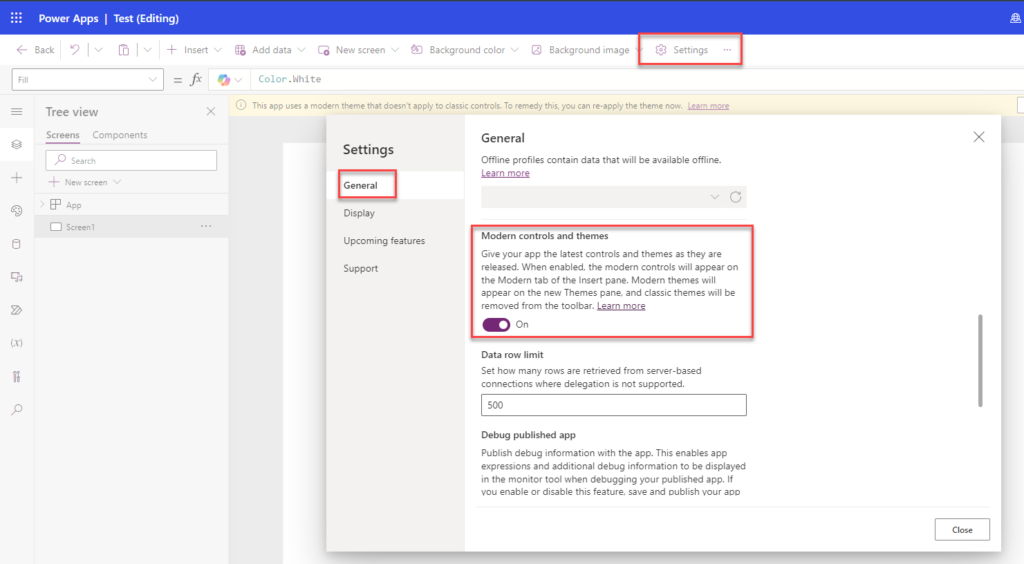

Power Apps Integration

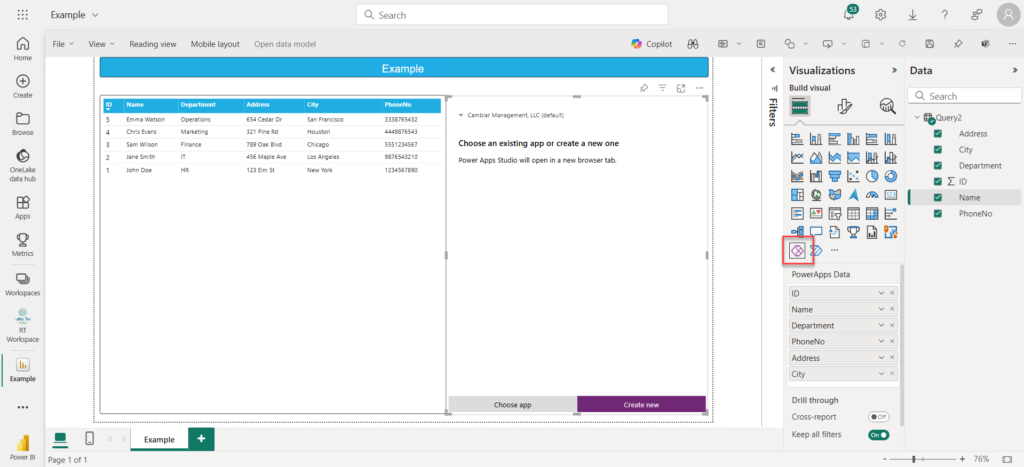

To integrate with Power Apps, use the Power BI service (the web version), because Power BI Desktop has limitations in this area. You can test the integration locally in Power BI Desktop, but full interaction with Power Apps requires the Power BI service.

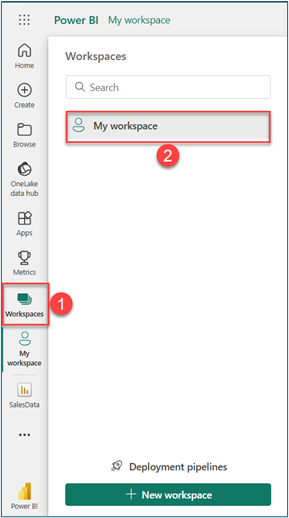

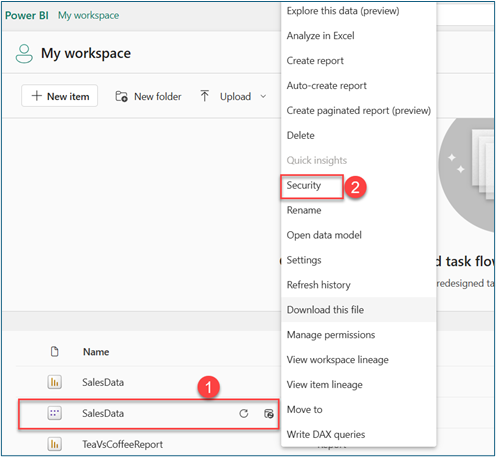

- Go to the Power BI service (https://app.powerbi.com/) and navigate to your workspace.

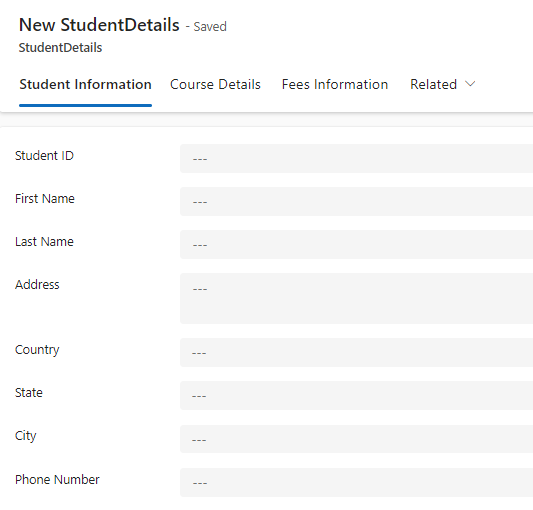

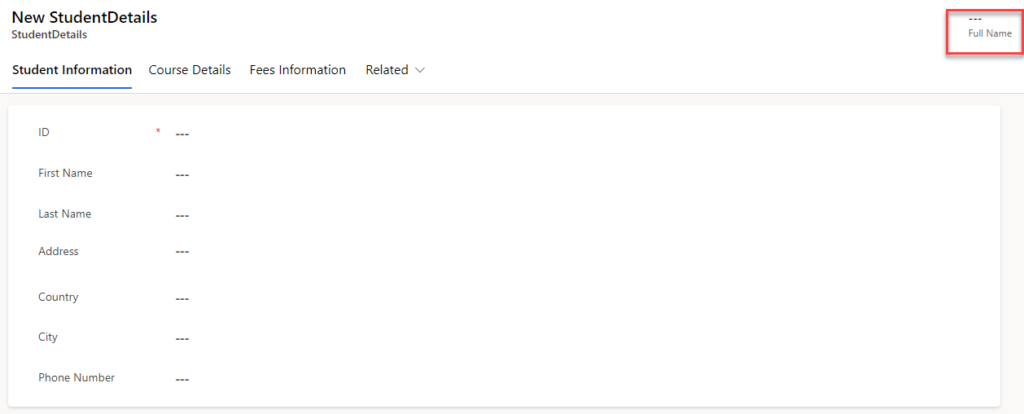

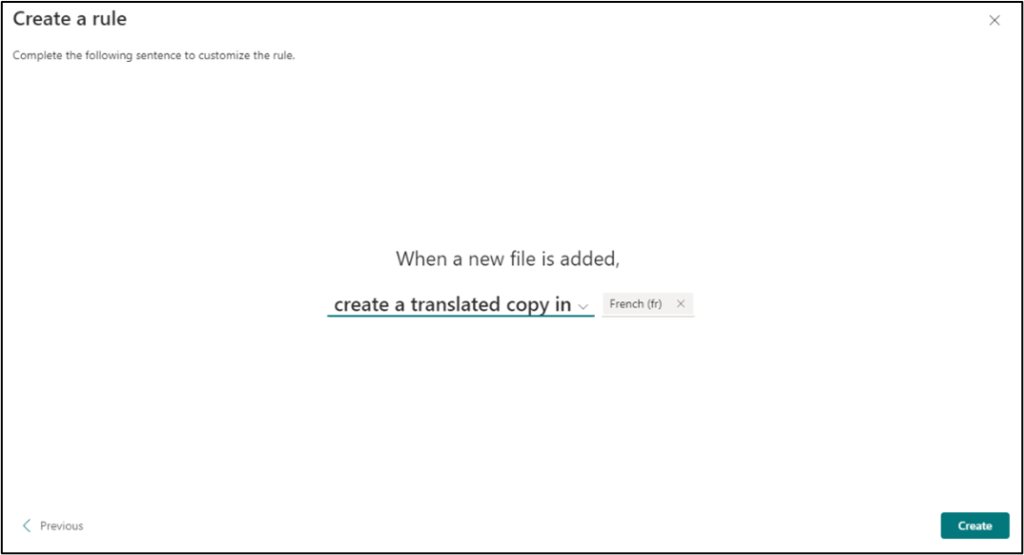

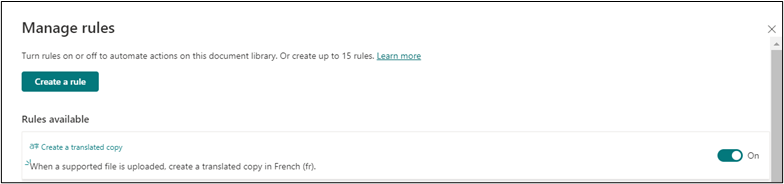

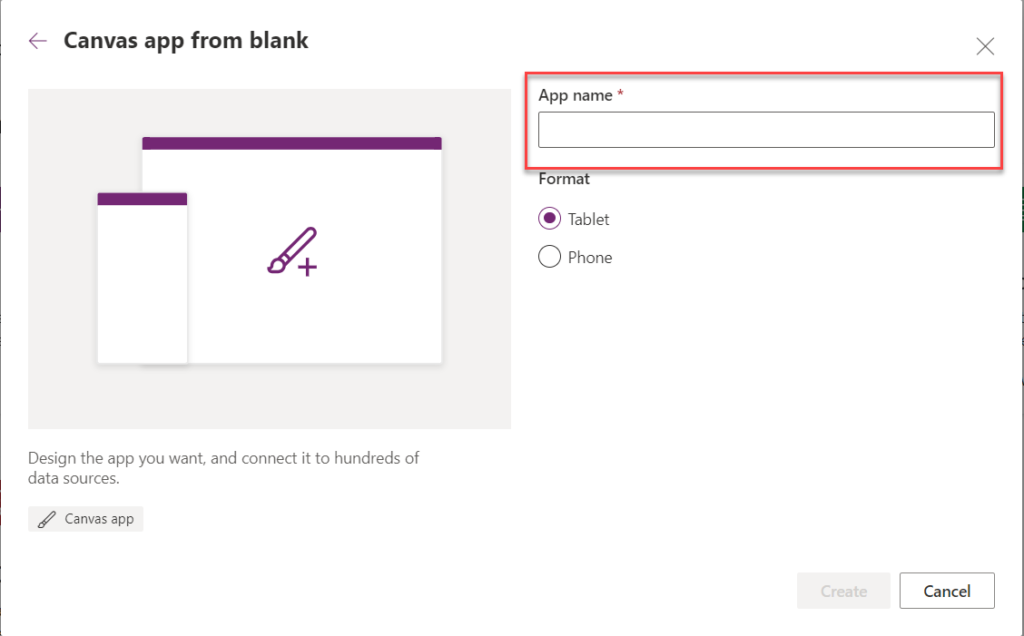

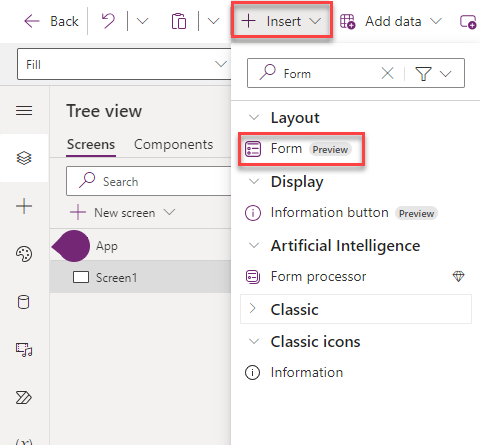

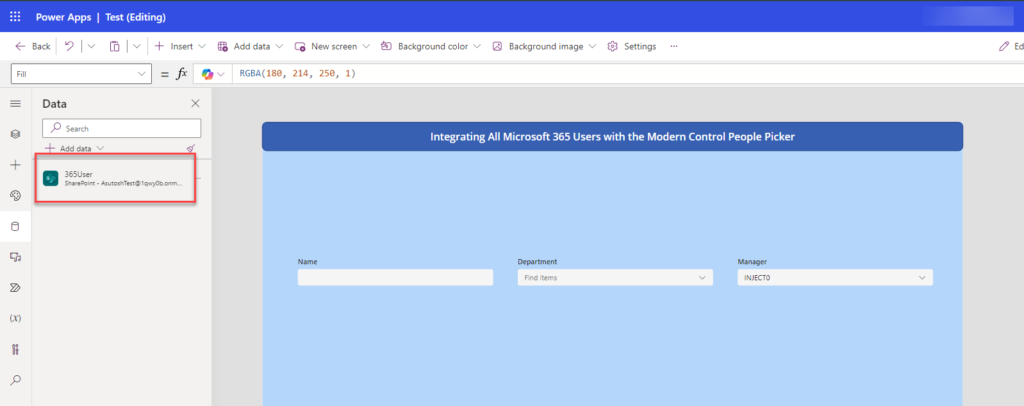

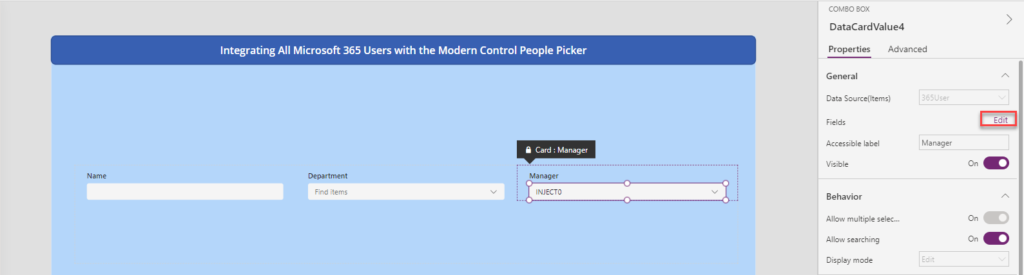

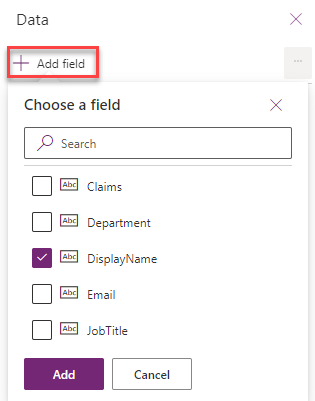

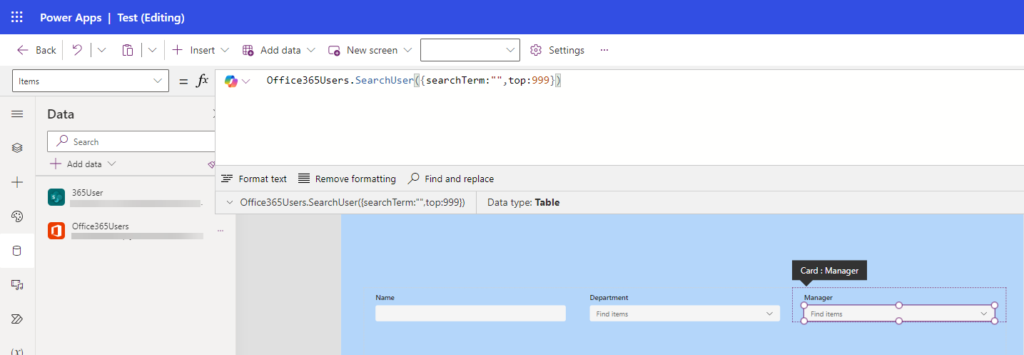

- Open the report, click on “Edit,” and add the Power Apps visual. Select the columns you want to display and click “Create New.”

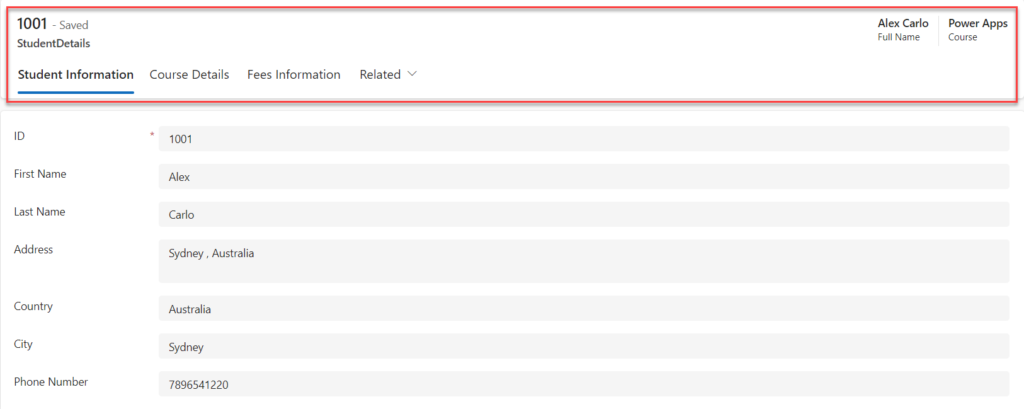

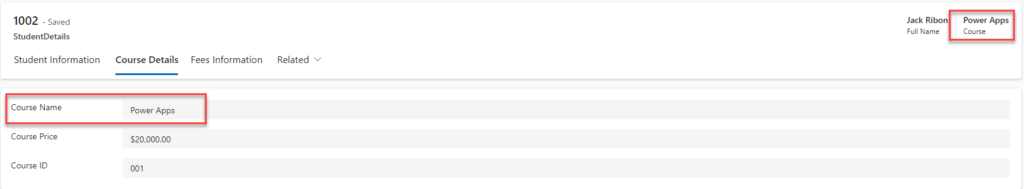

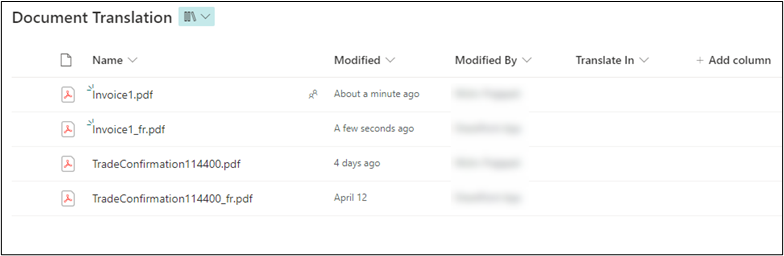

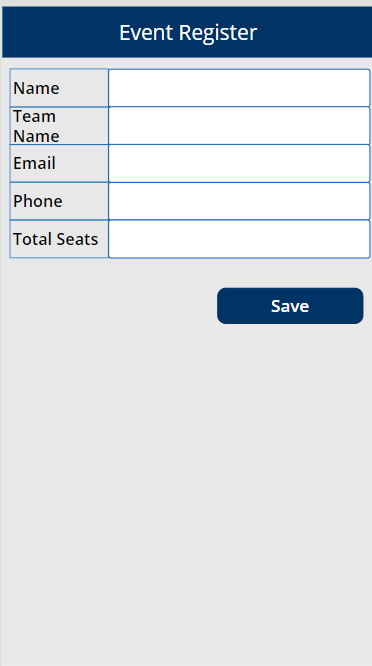

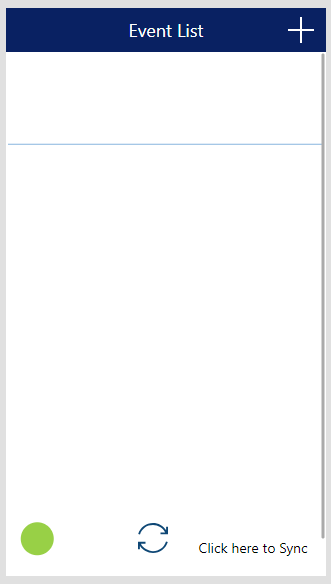

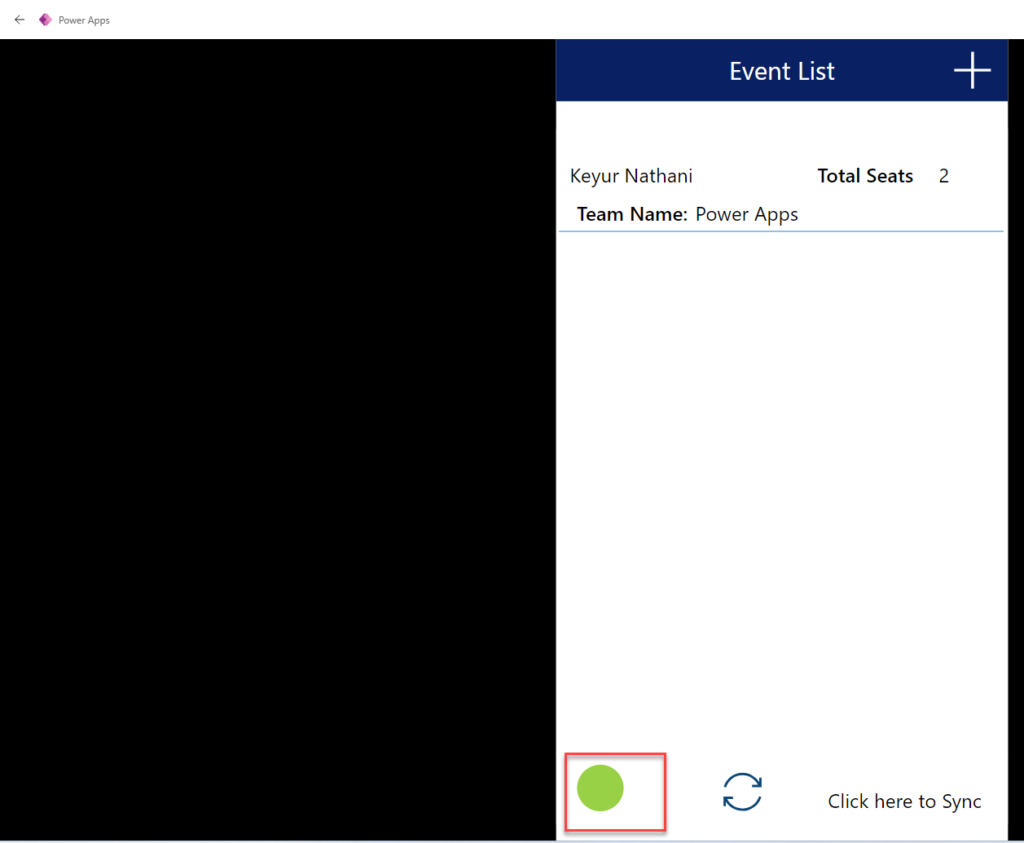

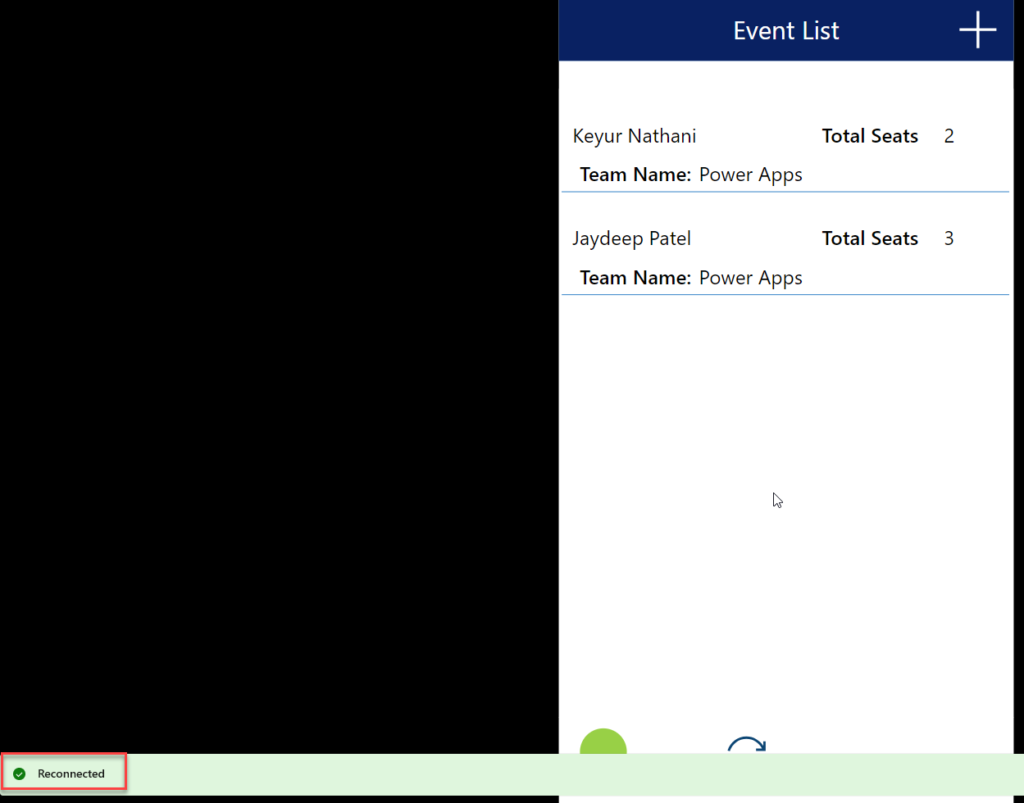

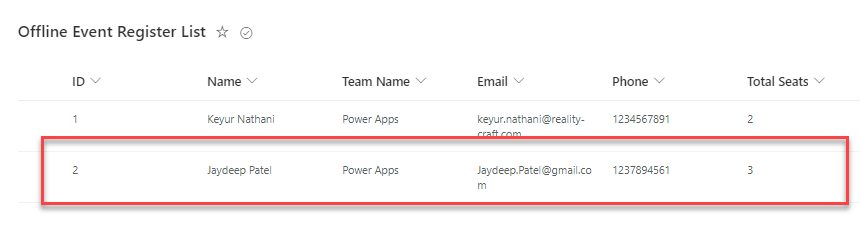

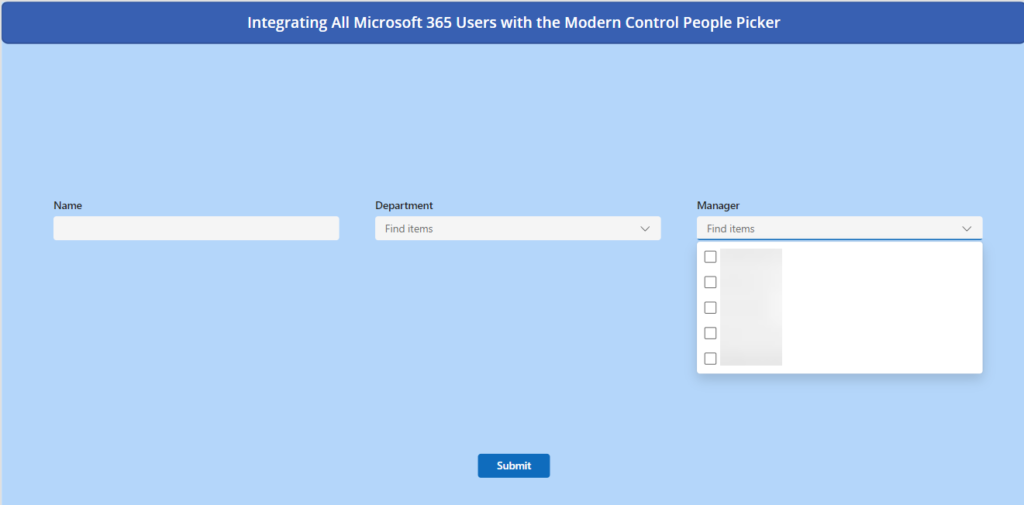

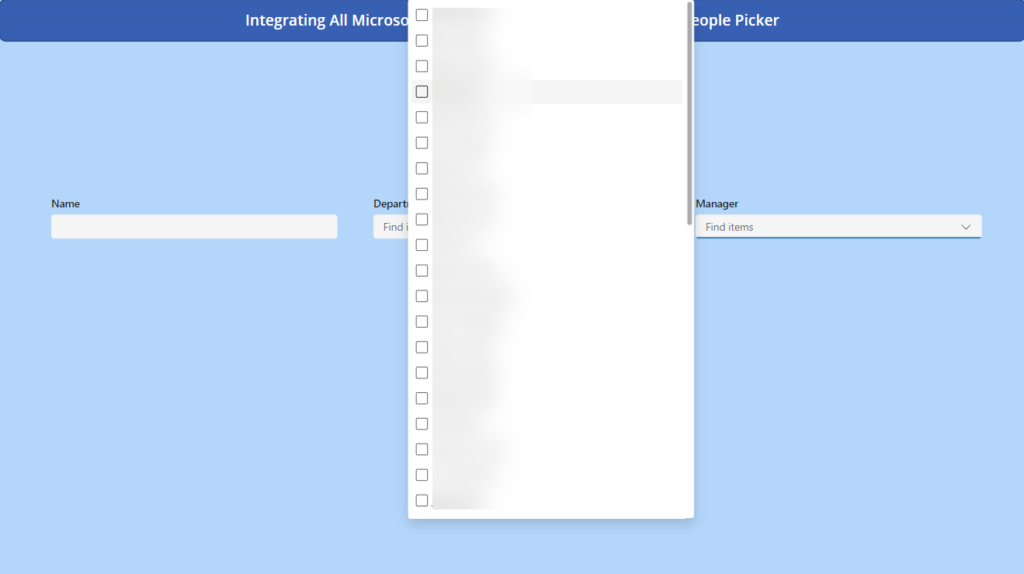

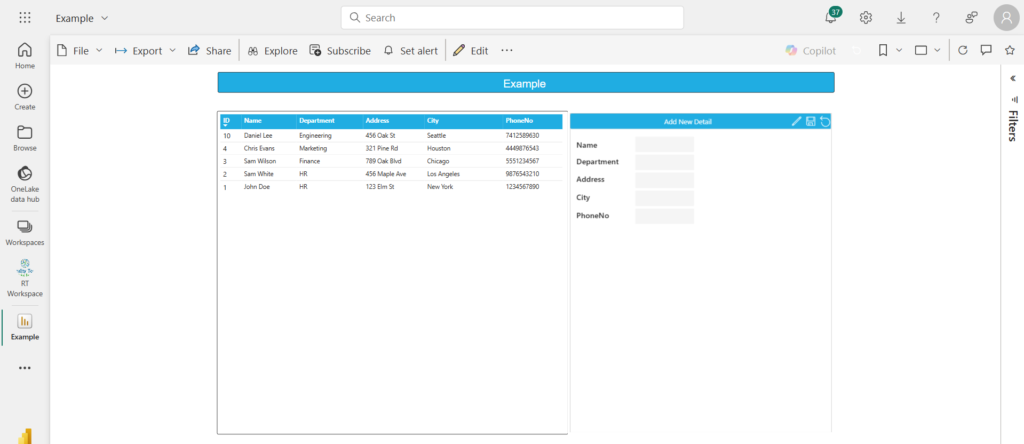

- After the app is created, both the Power Apps visual and the Power BI report visual appear on the report page, as shown here.

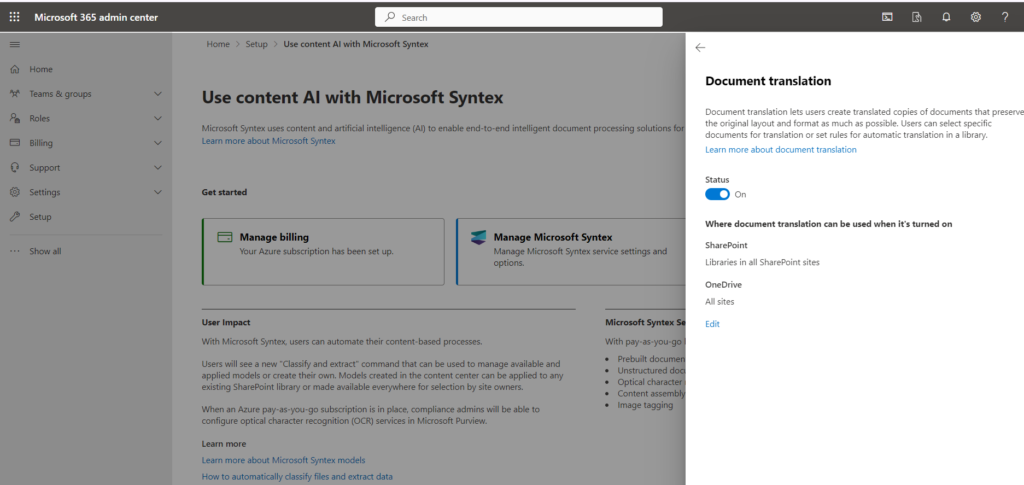

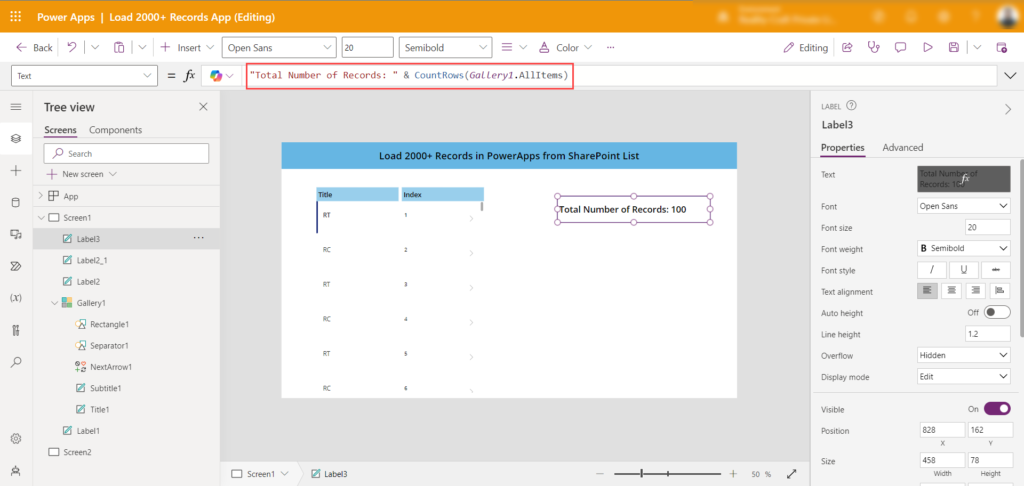

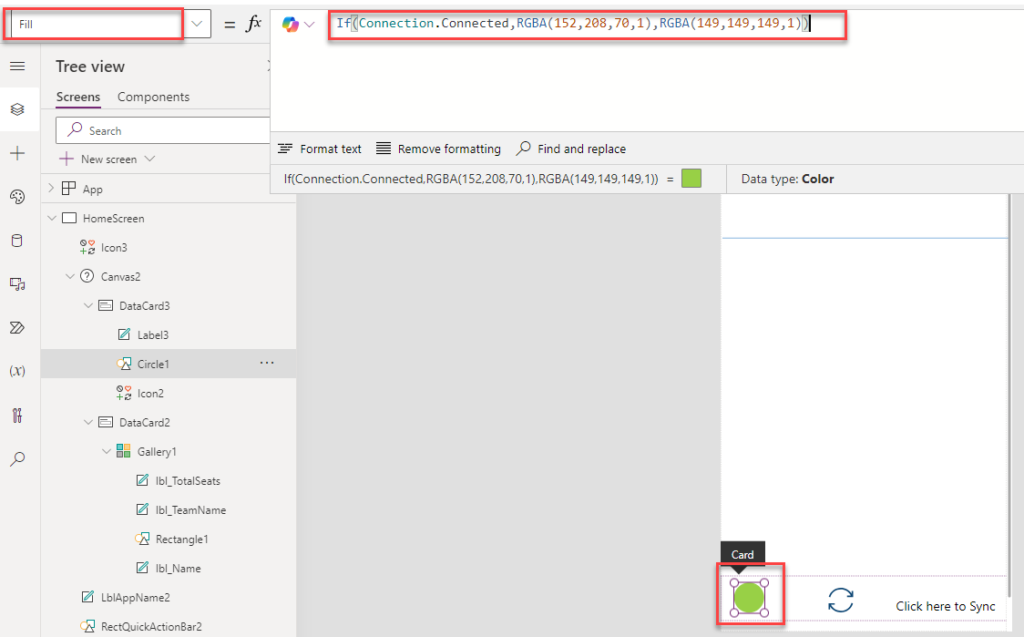

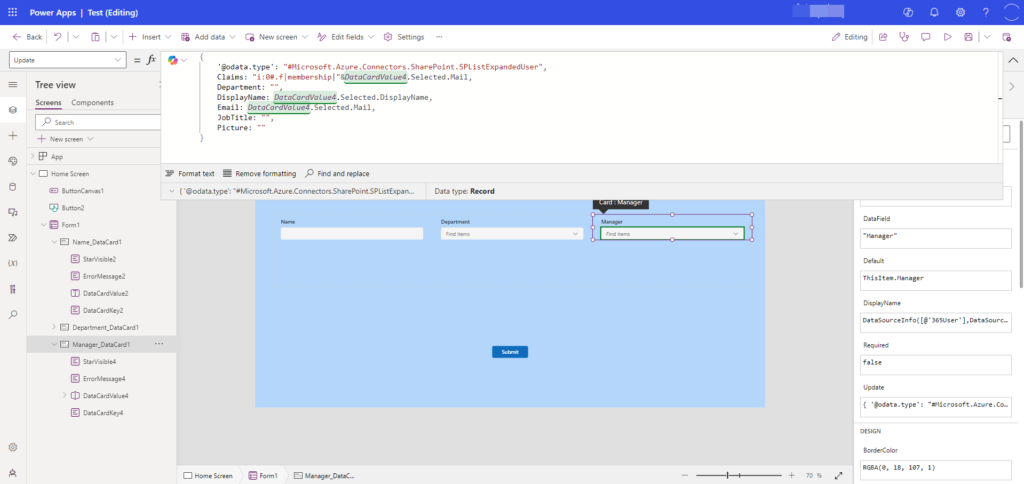
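As a rough illustration of what the generated app works with: an app created from the Power Apps visual receives the selected report columns through the PowerBIIntegration object. The sketch below shows three separate property formulas in Power Fx. The control names (Gallery1, lblRowCount) and the column name Product are hypothetical placeholders, not names taken from this walkthrough.

```powerfx
// Items property of a gallery (assumed name: Gallery1).
// PowerBIIntegration.Data is the table of rows passed in from the report,
// limited to the columns selected in the Power Apps visual.
PowerBIIntegration.Data

// Text property of a label inside Gallery1.
// "Product" is a hypothetical text column; use one of your own selected columns.
ThisItem.Product

// Text property of a label outside the gallery (assumed name: lblRowCount).
"Rows received from Power BI: " & CountRows(PowerBIIntegration.Data)
```

Each formula goes into the property named in its comment; together they are not a single script. The table passed to the app follows the filters and selections applied in the report, so the gallery contents change as users interact with the other visuals.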

Add PowerBIIntegration.Refresh() Formula

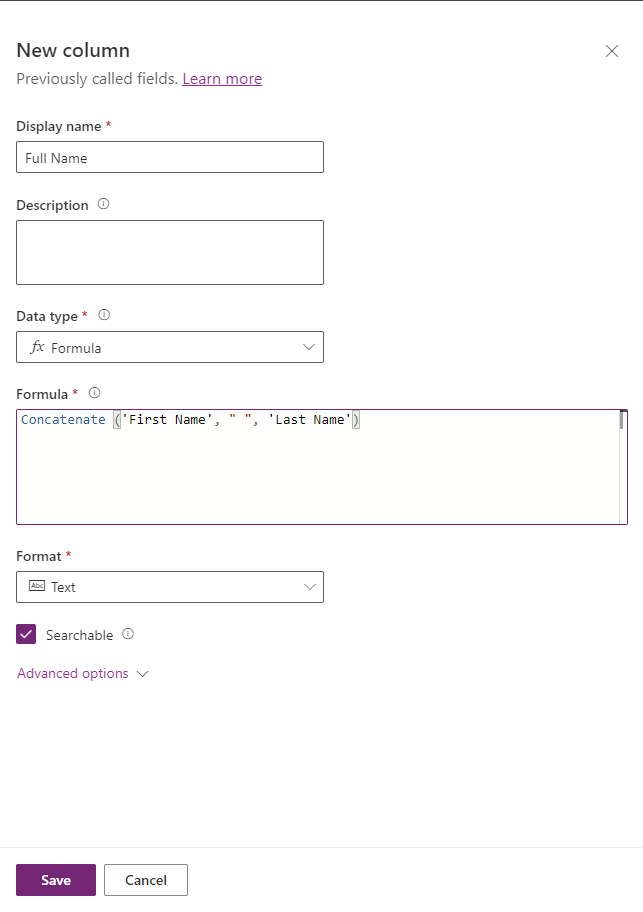

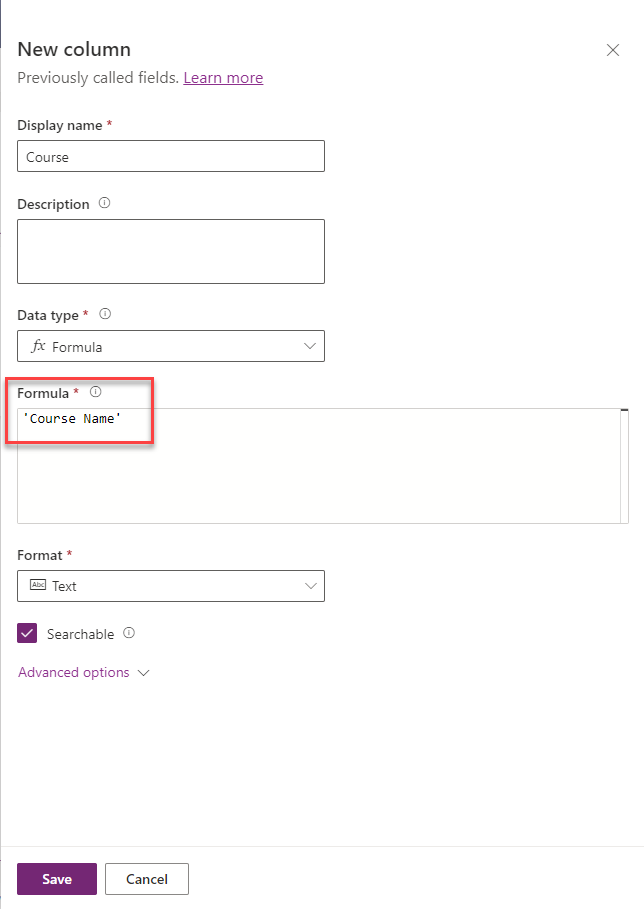

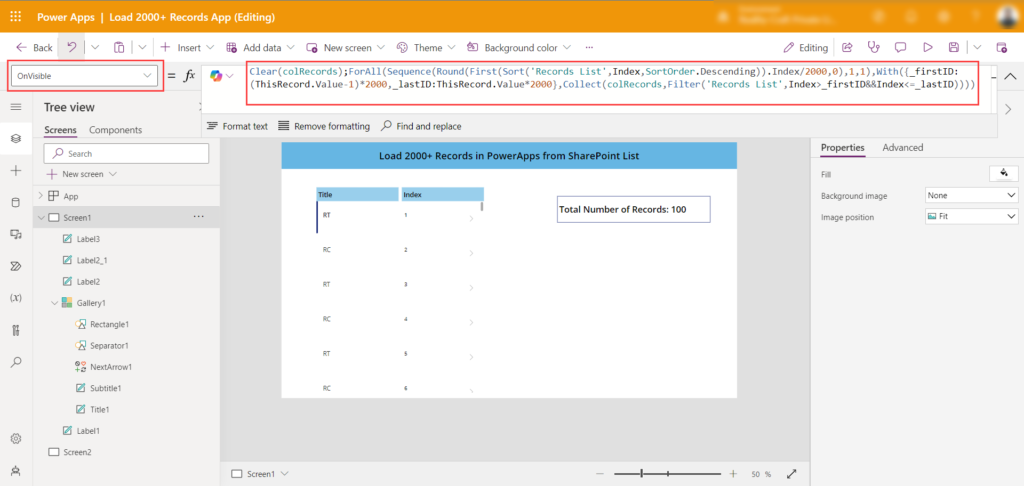

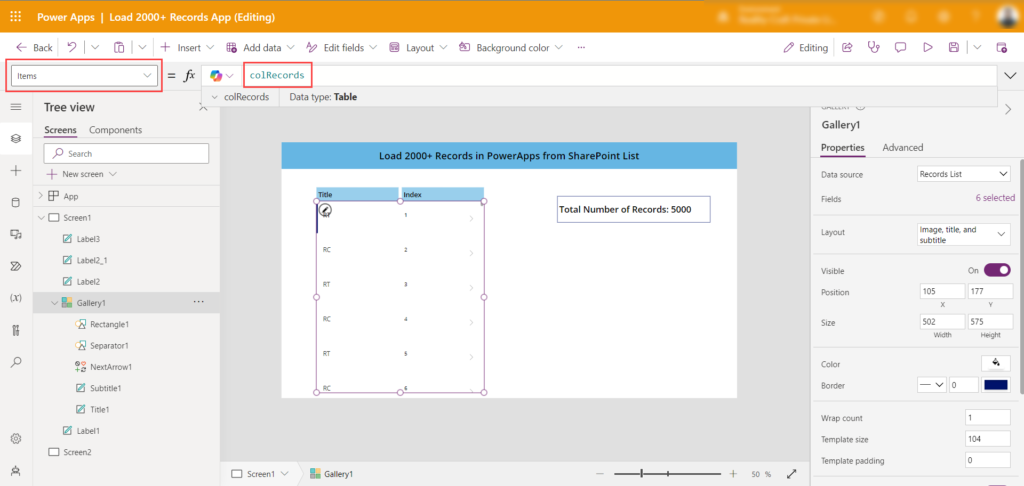

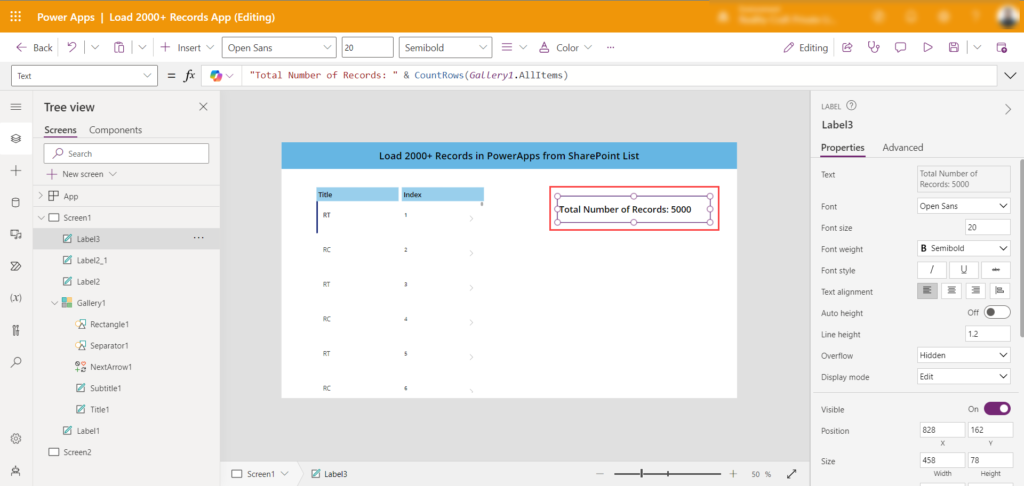

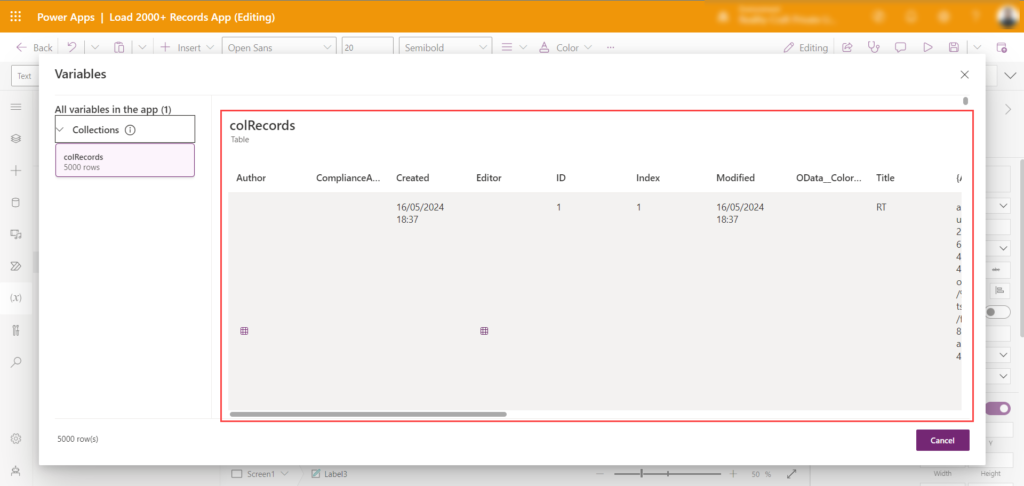

In the newly created Power App:

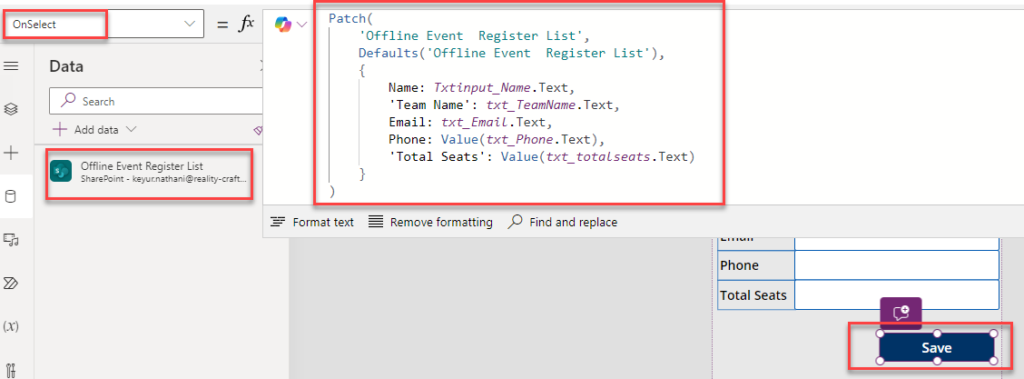

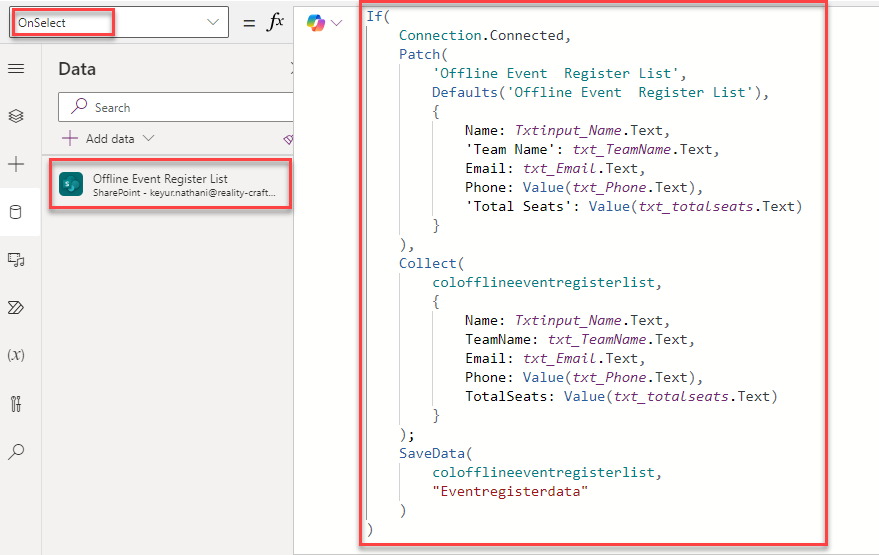

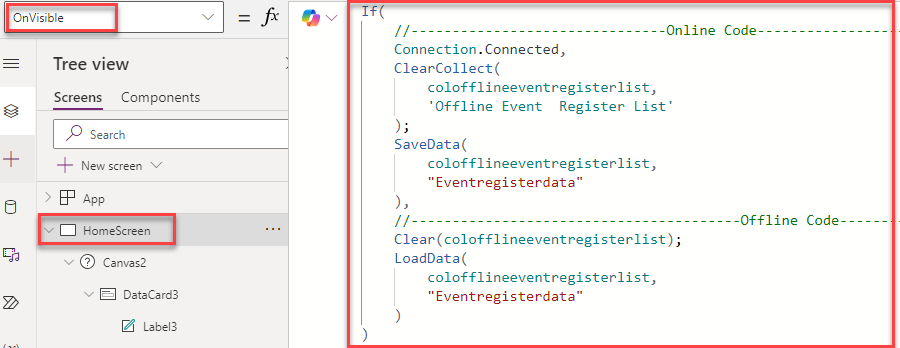

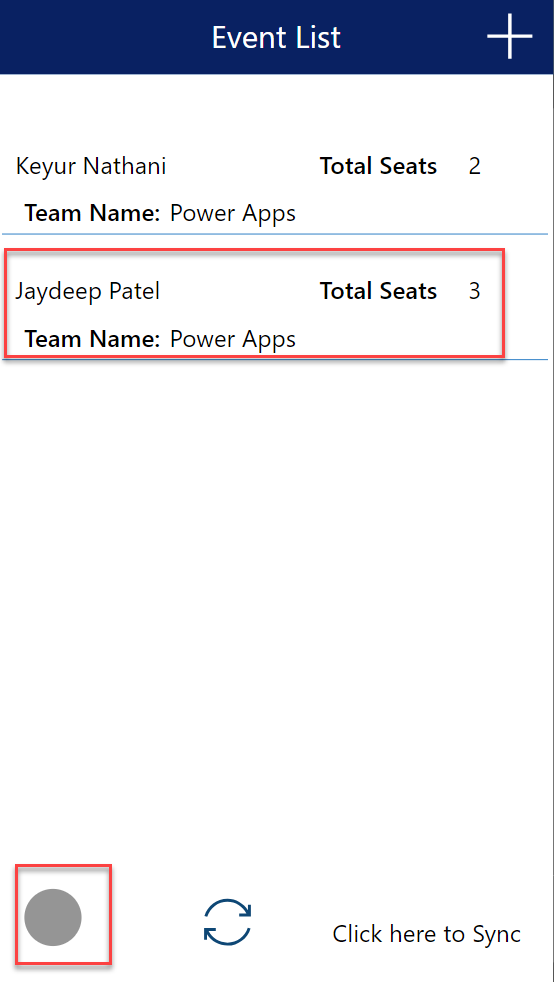

- Design the app to allow data submission, updates, or deletions.

- In your new Power App, at the point where data is submitted, updated, or deleted, add the following formula: PowerBIIntegration.Refresh()

- For example, add PowerBIIntegration.Refresh() to the submit button’s OnSelect property to trigger the report refresh after each submission.

Note: This formula works only in apps newly created from the Power Apps visual; it will not work in existing apps. It ensures that after data is entered, updated, or deleted in Power Apps, the Power BI visual refreshes automatically to display the most current data.
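A minimal Power Fx sketch of this step, assuming the app writes its changes through an Edit form. EditForm1, galSales, and the Sales data source are hypothetical names introduced for illustration; the only call taken from this guide is PowerBIIntegration.Refresh(). Each snippet belongs to a different property, as noted in the comments.

```powerfx
// OnSelect of the submit button: send the form's changes to the data source.
// EditForm1 is an assumed Edit form bound to the table used by the report.
SubmitForm(EditForm1)

// OnSuccess of EditForm1: runs only after the write has succeeded,
// so the report is refreshed only when there is new data to show.
PowerBIIntegration.Refresh()

// OnSelect of a delete button: remove the selected row, then refresh.
// galSales is an assumed gallery whose Items property is the Sales data source.
Remove(Sales, galSales.Selected);
PowerBIIntegration.Refresh()
```

Placing the refresh in the form's OnSuccess property, rather than directly after SubmitForm, is one way to avoid refreshing the report when validation or the write fails. Putting it straight into the button's OnSelect, as described above, also works for simple cases.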

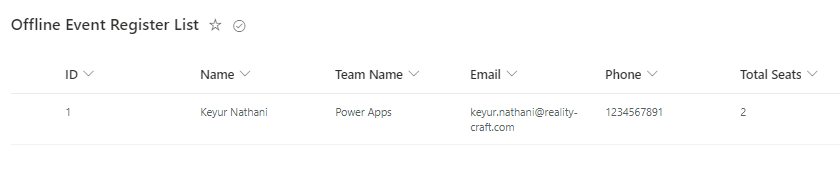

How It Works

When data is submitted, updated, or deleted in the Power Apps visual, the PowerBIIntegration.Refresh() formula triggers a refresh of the Power BI report. The report then reflects the latest data without a manual refresh.

Conclusion

Real-time data integration between Power Apps and Power BI transforms the way businesses operate, enabling dynamic and informed decision-making. By leveraging this seamless connection, you can eliminate delays, enhance accuracy, and gain immediate insights whenever new data becomes available. This integration not only saves time but also empowers teams to stay ahead in fast-paced environments. Implementing this solution opens up opportunities to make smarter, data-driven decisions with confidence, ensuring that your business remains agile and competitive in a world where every moment counts.